| Multiple RGB-D Camera-based User Intent Area and Object Estimation System for Intelligent Agent | |

|---|---|

|

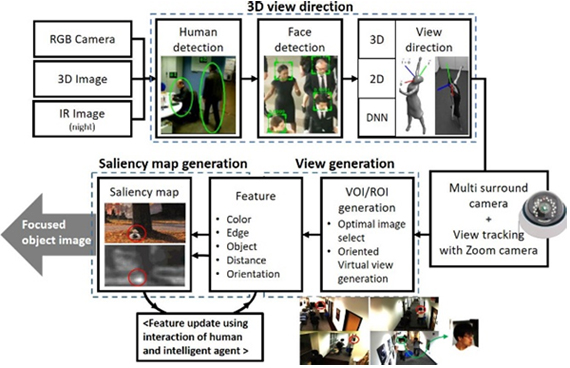

Currently, most AI agents react only when the user speaks to them and gives a command. However, if the agent is told which region the person is interested in, it can predict the person's behavioral pattern and provide proactive interaction services accordingly. Gaze is one of the most important cues for analyzing behavioral patterns and for representing a person's current area of interest, so many methods for estimating human gaze have been studied for HCI (Human Computer Interaction). In this paper, a technique for user intention and object estimation is proposed, using 3D view tracking, to support intelligent interactive technology in cooperative human-machine systems. The 3D view tracking method is based on multiple RGB-D cameras and assumes that the view direction and the face direction are the same. Because multiple RGB-D cameras are used, the method can estimate not only the view direction but also the view area, using both 2D and 3D data, over a comparatively large area and without any wearable device.

First, the RGB-D cameras are arranged so that the desired view tracking range is covered, taking their 3D measurement range into account. Next, the RGB-D cameras are calibrated against each other, and a full 3D map of the measurement area is generated by integrating all of the 3D data into a single coordinate system. When a person is detected in the generated 3D map, the person's head region is extracted and the 3D view vector is obtained through head pose estimation in the 3D map. Finally, 3D view tracking is performed by determining which points of the 3D map the 3D view vector points to. Once 3D view tracking is complete, the 3D view points within the 3D map can be calculated. If the RGB and depth images of each RGB-D camera have been calibrated beforehand, the corresponding view point coordinates in the RGB image can then be extracted.
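The step of integrating all the 3D data into one coordinate system can be sketched as below. This is a minimal illustration, not the authors' implementation: it assumes a prior calibration has already produced, for each camera, a rotation `R` and translation `t` that map camera-frame points into the shared world frame (`p_world = R p_cam + t`). The paper does not say how the calibration is done, and `transform_point` and `merge_point_clouds` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def transform_point(R: Mat3, t: Vec3, p: Vec3) -> Vec3:
    """Apply the rigid transform p' = R p + t (camera frame -> world frame)."""
    return tuple(
        R[i][0] * p[0] + R[i][1] * p[1] + R[i][2] * p[2] + t[i] for i in range(3)
    )


def merge_point_clouds(
    clouds: List[List[Vec3]], extrinsics: List[Tuple[Mat3, Vec3]]
) -> List[Vec3]:
    """Express every camera's points in the shared world frame and concatenate them.

    Hypothetical helper: extrinsics[k] = (R, t) is assumed to come from a
    separate multi-camera calibration step.
    """
    if len(clouds) != len(extrinsics):
        raise ValueError("need one (R, t) pair per camera")
    merged: List[Vec3] = []
    for cloud, (R, t) in zip(clouds, extrinsics):
        merged.extend(transform_point(R, t, p) for p in cloud)
    return merged
```

For example, if camera 0 defines the world frame and camera 1 faces it from 4 m away (rotated 180° about the vertical axis, `R = [[-1, 0, 0], [0, 1, 0], [0, 0, -1]]`, `t = (0, 0, 4)`), a point seen 2 m in front of each camera lands at the same world coordinate `(0, 0, 2)`, which is what lets the separate depth images form one 3D map.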
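The head pose to 3D view vector step, and the test of which map points that vector points to, might look like the sketch below. Several assumptions are not taken from the paper. The head pose is reduced to yaw and pitch angles in the world frame, using the convention x right, y up, z forward. The ray origin is the head position. The "hit" is the nearest map point (along the ray) that lies within a hypothetical `radius` of the ray. All function names are illustrative.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def view_vector(yaw: float, pitch: float) -> Vec3:
    """Unit view direction from head yaw/pitch in radians.

    Assumed convention: x right, y up, z forward; yaw = pitch = 0 looks along +z.
    Following the paper's assumption, face direction is taken as view direction.
    """
    cp = math.cos(pitch)
    return (cp * math.sin(yaw), math.sin(pitch), cp * math.cos(yaw))


def find_view_point(
    origin: Vec3, direction: Vec3, points: List[Vec3], radius: float = 0.05
) -> Optional[Vec3]:
    """Return the closest map point within `radius` of the view ray, or None.

    `direction` must be a unit vector so that `s` below is a distance in metres.
    """
    best: Optional[Vec3] = None
    best_s = math.inf
    for p in points:
        d = tuple(p[i] - origin[i] for i in range(3))
        s = sum(d[i] * direction[i] for i in range(3))  # distance along the ray
        if s <= 0:
            continue  # point is behind (or level with) the head
        # squared perpendicular distance from p to the ray (Pythagoras)
        perp2 = sum(d[i] * d[i] for i in range(3)) - s * s
        if perp2 <= radius * radius and s < best_s:
            best, best_s = p, s
    return best
```

Taking the nearest point along the ray approximates "the first surface the person is looking at". A real system would likely use a spatial index rather than a linear scan over the whole map.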
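Once a 3D view point is known in the map, its RGB image coordinates follow from undoing that camera's world transform and applying a pinhole projection. The sketch below assumes the RGB and depth images are already registered (as the text requires), so the color camera's intrinsics `fx, fy, cx, cy` apply. It also assumes `(R, t)` maps camera-frame points to world coordinates, as in `p_world = R p_cam + t`. Lens distortion is ignored, and the function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def world_to_camera(R: Mat3, t: Vec3, p: Vec3) -> Vec3:
    """Invert p_world = R p_cam + t, i.e. p_cam = R^T (p_world - t)."""
    d = [p[i] - t[i] for i in range(3)]
    # (R^T d)_j = sum_i R[i][j] * d[i]
    return tuple(R[0][j] * d[0] + R[1][j] * d[1] + R[2][j] * d[2] for j in range(3))


def project_to_pixel(
    p_cam: Vec3, fx: float, fy: float, cx: float, cy: float
) -> Optional[Tuple[float, float]]:
    """Pinhole projection (no distortion) of a camera-frame point to (u, v).

    Returns None when the point is not in front of the camera.
    """
    x, y, z = p_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)
```

With an identity pose and `fx = fy = 500`, `cx = 320`, `cy = 240`, the point `(0.5, -0.25, 2.0)` projects to pixel `(445.0, 177.5)`. With several cameras, one would pick a camera for which the projection is not `None` and falls inside the image.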
In this paper, the view image point is used for user intent area and object estimation: extracting the neighborhood of the view image point from the RGB image yields an image of the user's region of interest.

Ki Hoon Kwon, Hyun Min Oh, and Min Young Kim, “Multiple RGB-D Camera-based User Intent Position and Object Estimation”, IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), 2018 |
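Extracting the vicinity of the view image point from the RGB image, as described above, can be sketched as a window crop clipped to the image borders. The square shape and the `half_size` parameter are assumptions, since the paper does not state how large the vicinity is. The image is modeled as a row-major list of rows so the sketch needs no imaging library, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def roi_bounds(
    u: float, v: float, width: int, height: int, half_size: int
) -> Tuple[int, int, int, int]:
    """Box (x0, y0, x1, y1), exclusive at x1/y1, centred on the rounded (u, v) and clipped to the image."""
    cu, cv = int(round(u)), int(round(v))
    x0 = max(0, cu - half_size)
    y0 = max(0, cv - half_size)
    x1 = min(width, cu + half_size + 1)
    y1 = min(height, cv + half_size + 1)
    return x0, y0, x1, y1


def crop_roi(
    image: Sequence[Sequence[int]], u: float, v: float, half_size: int
) -> List[List[int]]:
    """Return the (2*half_size+1)-wide neighbourhood of (u, v), smaller near the borders."""
    height = len(image)
    width = len(image[0]) if height else 0
    x0, y0, x1, y1 = roi_bounds(u, v, width, height, half_size)
    return [list(row[x0:x1]) for row in image[y0:y1]]
```

The cropped patch is what would then be passed to an object recognizer to estimate which object the user is attending to.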